Pretrained fastText embeddings are great. They were trained on a many languages, carry subword information, support OOV words.

But their main disadvantage is the size. Even compressed version of the binary model takes 5.4Gb. This fact makes it impossible to use pretrained models on a laptop or a small VM instances.

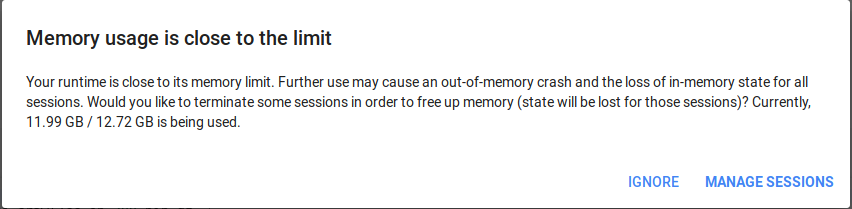

Being loaded into RAM, this model takes even more memory ~ 16Gb. So you can’t use it directly with Google Colab, which only gives you 12 GB of RAM.

There are two main reasons why binary models weight so much. The first one is that binary model carries not only weights for words and n-grams, but also weights for translating vectors back to words. These vectors are also called negative vectors and are used for additional training of the model.

The second reason is that word and n-gram matrices are very large, they contain 2M vectors each. This is more than enough for most practical applications.

Let’s deal with both of this problems.

The easiest part is to just drop trainables. One way to do this is to convert the native fastText format into Gensim representation.

from gensim.models import FastText

# Original fasttext embeddings from https://fasttext.cc/

ft = FastText.load_fasttext_format("fasttext_embedding.bin")

# we are not saving trainables here

ft.wv.save('fasttext_gensim.model')

That is easy. Now instead of 16Gb of RAM, our model will take only 10Gb.

Let’s go further.

There are two embedding matrices in the fastText model: vocab matrix and n-gram matrix. The first one is simple, it just holds the embedding vector for each word in the vocabulary. Vocabulary is also presented and is sorted by frequency, so the only thing we need to do is to take the first N rows from this matrix and remove infrequent words from the vocabulary. You may find a reference to my implementation at the bottom of this article.

The second matrix is more tricky.

It does not hold a direct mapping of n-grams to vectors inside this matrix. Instead of it, the fastText model performs hashing trick: It calculates n-gram hashes and maps its remainder of the division by matrix size. This trick allows having some vector representation for any n-gram with a little collision probability.

So to shrink this matrix we will need to re-map old indexes to new ones. And here comes two things we need to decide:

- How to resolve collisions.

- How to choose a new matrix size.

The answer to the first question is more practical than theoretical. I have just tested several variants and came up with the decision to use a simple weighted average of collided n-grams, where the weights are derived from n-gram frequency.

The second answer may be not so intuitive like the first one. At first glance, you might think that the larger the new matrix is, the more information we preserve. But it is not true.

The loss of information depends not only on the total number of collisions but also on the range of variants this collision has to be resolved on.

Imagine we have 10 collisions among 2 n-grams each and a single collision among 20 n-grams. In the first case, we can save half of the information and only 1/20 in the second one.

This means that we are also interested in the uniform distribution of collisions.

We can achieve this uniformity if the size of the smaller matrix has a larger GCD with the original size.

In this case, the amount of n-grams candidates for a single id will not exceed old_size / GCD(old_size, new_size)

Thereby it would be efficient to compress 2M matrix into 1M, 500K or 250K.

To ensure that new n-gram matrix is not broken, we may need to estimate quality. One way to do it is to measure the similarity between vocab vectors of the original model and reconstructed from n-grams vectors of a new model.

test_vocab_size = 100_000

test_vocab = sorted_vocab[new_vocab_size:new_vocab_size + test_vocab_size]

sims = []

for test_word, _ in test_vocab:

sim = ft.cosine_similarities(

ft.get_vector(test_word),

[new_ft.get_vector(test_word)]

)

if not np.isnan(sim):

sims.append(sim)

print("Similarity:", np.sum(sims) / test_vocab_size)

The closer similarity value is to 1, the more information is preserved.

Here is a comparison of a resulting similarity with different params.

+-----------------+----------------+------------+

| New matrix size | Resolve method | Similarity |

+-----------------+----------------+------------+

| 500000 | max | 0.834 |

| 500000 | avg | 0.858 |

| 750000 | max | 0.749 |

| 750000 | avg | 0.789 |

| 800000 | max | 0.807 |

| 800000 | avg | 0.837 |

| 1000000 | max | 0.931 |

| 1000000 | avg | 0.941 |

+-----------------+----------------+------------+

Notice, that similarity of the models with smaller GCD is smaller, just like we expected.

Finally

-

We obtained a model which takes only 2Gb of RAM and 94% similar to the original model.

-

Check out the full implementation on my Gist.