Neural networks have achieved great success on many NLP tasks; however, they struggle to handle infrequent patterns. This article describes the problem in the context of machine translation.

The authors note that neural machine translation (NMT) learns frequently observed translation pairs well, but the system may ‘forget’ to use low-frequency pairs when they are needed. In contrast, traditional rule-based systems store low-frequency pairs explicitly, so those pairs cannot be smoothed out no matter how rare they are. One solution is to combine both approaches.

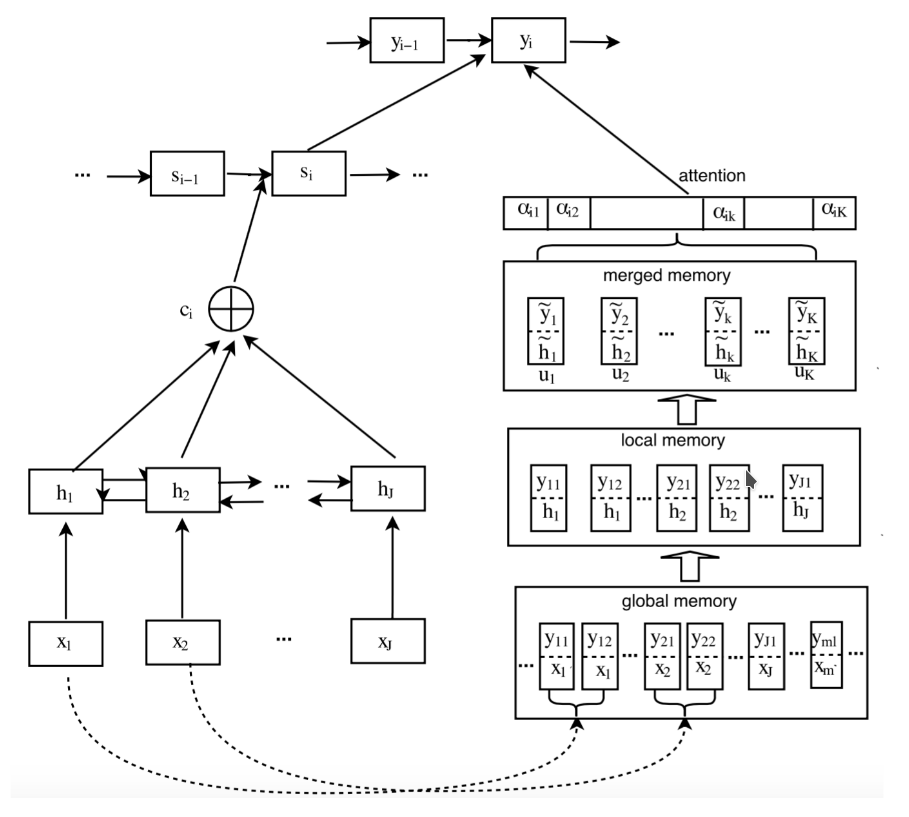

The authors propose to augment the network with a large external memory and a selection mechanism that lets the network use this memory. Selection fetches all relevant translation variants for the words in the source sentence, and an attention mechanism then chooses among these variants. Finally, at each translation step, the network decides which source of prediction to use: its own output or the memory.
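The three steps above (selection, attention over the selected variants, and a per-step choice between network and memory) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the memory layout (a dict from source word to `(target word, vector)` pairs), dot-product attention scoring, the sigmoid gate, and all names (`select_candidates`, `memory_distribution`, `combine`, `gate_weights`) are hypothetical. In the toy run, the network's own distribution favours the frequent word "dog", while the memory recovers the rarer pair "chat" → "cat".

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
# Hypothetical memory layout: source word -> list of (target word, memory vector).
Memory = Dict[str, List[Tuple[str, Vector]]]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def select_candidates(source_words: List[str], memory: Memory) -> List[Tuple[str, Vector]]:
    """Selection: gather every stored translation variant of every source word."""
    candidates: List[Tuple[str, Vector]] = []
    for word in source_words:
        candidates.extend(memory.get(word, []))
    return candidates


def memory_distribution(state: Vector, candidates: List[Tuple[str, Vector]]) -> Dict[str, float]:
    """Attention: score each candidate against the decoder state (assumed dot product),
    normalise with softmax, and sum the weights of candidates sharing a target word."""
    if not candidates:
        return {}
    weights = softmax([dot(state, vec) for _, vec in candidates])
    dist: Dict[str, float] = {}
    for (target, _), w in zip(candidates, weights):
        dist[target] = dist.get(target, 0.0) + w
    return dist


def combine(neural_probs: Dict[str, float], memory_probs: Dict[str, float],
            state: Vector, gate_weights: Vector) -> Dict[str, float]:
    """Gating: a sigmoid of the decoder state (assumed form) sets how much weight the
    network's prediction gets versus the memory's at this step."""
    if not memory_probs:
        return dict(neural_probs)  # nothing selected: fall back to the network alone
    g = 1.0 / (1.0 + math.exp(-dot(gate_weights, state)))
    vocab = set(neural_probs) | set(memory_probs)
    return {w: g * neural_probs.get(w, 0.0) + (1.0 - g) * memory_probs.get(w, 0.0)
            for w in vocab}


# Toy run: one decoding step for the source sentence "le chat".
memory = {"chat": [("cat", [2.0, 0.0]), ("talk", [0.0, 2.0])]}
state = [1.0, 0.0]
candidates = select_candidates(["le", "chat"], memory)
mem = memory_distribution(state, candidates)
neural = {"cat": 0.2, "dog": 0.8}  # the network has "forgotten" the rare pair
final = combine(neural, mem, state, gate_weights=[0.0, 0.0])  # gate = 0.5
print(max(final, key=final.get))  # cat
```

A scalar gate keeps the mixture a valid probability distribution, since it is a convex combination of two distributions; a real system would learn the gate and attention parameters jointly with the decoder.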

An important detail is that the memory vectors are trained separately from the translation network. This suggests that knowledge bases for neural networks can be built independently of the networks themselves, which looks like a promising way to construct truly large-scale models with very high capacity.